Robots.txt Generator | Generate Complete Robots.txt Online

What Is a Free Robots.txt Generator Tool?

A robots.txt generator is an online tool that helps website owners create a robots.txt file, a plain-text file used to communicate with search engine crawlers and other web robots. Located in the root directory of your website, this file lists which pages or directories crawlers may access and which they should avoid. By managing these instructions carefully, website owners can influence how search engines crawl their site. Note that robots.txt governs crawling rather than indexing: a disallowed URL can still appear in search results if other pages link to it.

The purpose of the robots.txt file is straightforward: it specifies directives for user agents (crawlers), which helps you manage how a website’s content is crawled. This helps bots prioritize relevant content and reduces unnecessary load on the server. A directive such as Crawl-delay can further space out a crawler’s requests, although it is a non-standard extension: some crawlers, such as Bingbot, honor it, while Googlebot ignores it.

Additionally, the robots.txt file can help with duplicate or low-value content by keeping crawlers away from pages that might negatively affect a website’s SEO performance. It is not a reliable way to hide sensitive information, however: the file itself is publicly readable, and compliance by crawlers is voluntary.

Why is the Robots.txt File Important?

Understanding the importance of the robots.txt file is crucial for anyone who owns or manages a website. Here are a few reasons why this file is indispensable:

– Improved Crawl Efficiency: The robots.txt file allows you to delineate which pages should be crawled. This optimizes crawling resources and ensures that search engine bots are focused on content that adds value to your website. Consequently, this leads to better utilization of server resources and enhances the overall site speed.

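– Enhanced Privacy and Security: The robots.txt file can ask well-behaved crawlers to stay out of private areas of your site, which reduces the chance of those pages surfacing in search results. It is not an access control, though: the file is public, non-compliant bots can ignore it, and disallowed URLs can still be indexed if they are linked from elsewhere. Sites that handle personal data or proprietary information should protect those pages with authentication and treat robots.txt only as a complement.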

– Better SEO Performance: By keeping crawlers away from low-quality or duplicate content, you can focus crawl activity on the pages you want to rank, which may improve your website’s search performance. This targeted approach helps you maintain a professional and SEO-friendly online presence.

– Faster Website Speed: By limiting unnecessary crawler requests to your server, the robots.txt file can reduce load, which may contribute to faster loading times and a better user experience, particularly on large sites or resource-constrained servers.

Overall, the robots.txt file is an essential tool for managing how search engines interact with your website. It can improve crawl efficiency, keep well-behaved crawlers out of private areas, support SEO, and reduce server load.

How Does the Robots.txt File Work?

The robots.txt file works through a set of instructions that compliant search engine crawlers follow when visiting your site. Here’s a step-by-step breakdown of how the process works:

1. Identification: When a search engine crawler visits your website, it first looks for the robots.txt file located in the root directory. This is the standardized location where crawlers expect to find crawling directives.

2. Instruction Reading: Upon locating the robots.txt file, the crawler reads the instructions contained within it. These directives inform the crawler whether it should access specific pages or directories.

3. Crawling Actions: Based on the directives, crawlers either crawl or ignore the specified content. For instance, if your robots.txt file includes the following line:

```
User-agent: *
Disallow: /private/
```

This means that all web robots are instructed not to crawl any pages or directories under /private/.
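
As a rough illustration of the three steps above, the following Python sketch uses the standard library’s urllib.robotparser to locate the file, read the rules, and decide whether a URL may be crawled. The domain example.com and the crawler name ExampleBot are placeholders chosen for this example, and real crawlers may interpret rules somewhat differently from this parser.

```python
from urllib.parse import urljoin
from urllib.robotparser import RobotFileParser

# Rules equivalent to the example above (assumed content of the file).
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def robots_url(page_url: str) -> str:
    """Step 1: robots.txt always lives at the root of the host."""
    return urljoin(page_url, "/robots.txt")


def build_parser(robots_txt: str) -> RobotFileParser:
    """Step 2: read the directives from the file's text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Step 3: decide whether a given URL may be crawled.
print(robots_url("https://example.com/blog/post.html"))
print(parser.can_fetch("ExampleBot", "https://example.com/private/report.html"))  # False
print(parser.can_fetch("ExampleBot", "https://example.com/blog/"))  # True
```

To check a live site instead of a string, the same parser can be pointed at a URL with set_url() followed by read().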

Understanding Keywords and User Agents

The robots.txt file can also specify certain user agents, which are the names assigned to different crawlers. This allows website owners to issue directives tailored to specific bots. For example, if you want to block Googlebot specifically from accessing a part of your website, your instructions might look like this:

```
User-agent: Googlebot
Disallow: /no-google/
```

This flexibility empowers website owners to manage how different search engines interact with their content more precisely.
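
A small sketch, again assuming Python’s urllib.robotparser, shows how a rule group addressed to one user agent leaves other crawlers unaffected. The name OtherBot is a made-up crawler used only for comparison.

```python
from urllib.robotparser import RobotFileParser

# A rule group that applies only to Googlebot.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /no-google/
"""

agent_parser = RobotFileParser()
agent_parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(user_agent: str, path: str) -> bool:
    """Return True if the named crawler may fetch the given path."""
    return agent_parser.can_fetch(user_agent, path)


print(is_allowed("Googlebot", "/no-google/page.html"))  # False: the group names Googlebot
print(is_allowed("OtherBot", "/no-google/page.html"))   # True: no group applies to OtherBot
print(is_allowed("Googlebot", "/blog/"))                # True: the path is not disallowed
```

Because no "User-agent: *" group exists in this file, any crawler not named explicitly is treated as unrestricted.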

How to Create a Robots.txt File

Creating a robots.txt file is a straightforward process. Here are detailed steps to guide you:

1. Choose a Text Editor: You can use any simple text editor such as Notepad, TextEdit, or even coding software to create your file.

2. Name Your File: Save the new document with the name “robots.txt” ensuring that the filename is exact. It must be all lowercase and without any additional extensions.

3. Draft Your Directives: Enter the appropriate directives for your website, based on your specific needs. For example:

```
User-agent: *
Disallow: /private/
Allow: /public/
Crawl-delay: 10
```

4. Upload the File: Place the robots.txt file in the root directory of your website. This directory is usually accessible via your web hosting service.

5. Testing: After uploading, verify that the file works correctly through tools such as the robots.txt report in Google Search Console, which replaced the older Robots.txt Tester.
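
The steps above can also be scripted. The sketch below is one possible approach in Python, not a required workflow: it writes the example directives to a file named robots.txt and then parses the saved file to confirm the rules behave as intended. A temporary directory stands in for your real web root, and the helper names write_robots_txt and check_rules are hypothetical.

```python
from pathlib import Path
from tempfile import TemporaryDirectory
from urllib.robotparser import RobotFileParser

# Step 3: the directives from the example above.
DIRECTIVES = """\
User-agent: *
Disallow: /private/
Allow: /public/
Crawl-delay: 10
"""


def write_robots_txt(web_root: Path, content: str) -> Path:
    """Steps 2 and 4: save the file as exactly 'robots.txt' in the web root."""
    target = web_root / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


def check_rules(path: Path) -> dict:
    """Step 5: parse the saved file and report how sample paths are treated."""
    parser = RobotFileParser()
    parser.parse(path.read_text(encoding="utf-8").splitlines())
    return {
        "private_allowed": parser.can_fetch("ExampleBot", "/private/page.html"),
        "public_allowed": parser.can_fetch("ExampleBot", "/public/page.html"),
        "crawl_delay": parser.crawl_delay("ExampleBot"),
    }


# A temporary directory stands in for the real web root (an assumption).
with TemporaryDirectory() as tmp:
    saved = write_robots_txt(Path(tmp), DIRECTIVES)
    result = check_rules(saved)

print(saved.name)
print(result)
```

Python’s parser applies the first rule that matches a path, whereas some search engines prefer the most specific rule, so a local check like this is a sanity test rather than a guarantee of how every crawler will behave.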

Utilizing the Robots.txt Generator Free Tool

For those unfamiliar with coding or who want to simplify the process further, a robots.txt generator can be your best ally. This tool allows you to create a robots.txt file tailored specifically to your website’s needs without needing to manually code each directive.

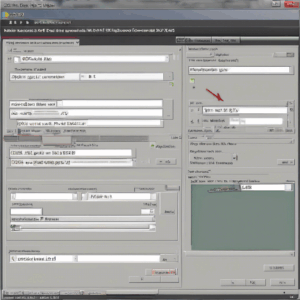

Step-by-Step Guide to Using the Robots.txt Generator

1. Access the Tool: Navigate to a reliable online robots.txt generator.

2. Select Options: The tool may offer several customizable options, such as the user agents to target, the paths you wish to block, and the paths you want to allow. Not all options are mandatory, so choose based on your needs.

3. Generate the File: Once you have made your selections, simply click the Generate button. The tool will compile your selections into a robots.txt file format.

4. Review and Download: Before finalizing, review the generated content for any necessary adjustments. Once satisfied, download the generated file.

5. Implement the File: Upload the downloaded robots.txt file to the root directory of your website, as previously discussed.
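
To make the idea concrete, here is a minimal sketch of what a generator might do internally: collect options per user agent and render them as robots.txt text. It is an illustration in Python, not the code of any particular tool, and the RuleGroup class and generate_robots_txt function are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class RuleGroup:
    """Options a user might select for one crawler (hypothetical structure)."""
    user_agent: str = "*"
    disallow: List[str] = field(default_factory=list)
    allow: List[str] = field(default_factory=list)
    crawl_delay: Optional[int] = None


def generate_robots_txt(groups: List[RuleGroup]) -> str:
    """Render the selected options as robots.txt text."""
    lines: List[str] = []
    for group in groups:
        lines.append(f"User-agent: {group.user_agent}")
        lines.extend(f"Disallow: {path}" for path in group.disallow)
        lines.extend(f"Allow: {path}" for path in group.allow)
        if not group.disallow and not group.allow:
            # An empty Disallow line means nothing is blocked for this agent.
            lines.append("Disallow:")
        if group.crawl_delay is not None:
            lines.append(f"Crawl-delay: {group.crawl_delay}")
        lines.append("")  # a blank line separates rule groups
    return "\n".join(lines).rstrip("\n") + "\n"


generated = generate_robots_txt([
    RuleGroup("*", disallow=["/private/"], allow=["/public/"], crawl_delay=10),
    RuleGroup("Googlebot", disallow=["/no-google/"]),
])
print(generated)
```

The returned string can be saved as robots.txt and reviewed before uploading, mirroring the review and download step above.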

Benefits of Using a Robots.txt Generator

– User-Friendly Interface: These generators often feature an intuitive interface that simplifies the file creation process, making it accessible even for those with minimal technical skills.

– Time-Saving: Rather than writing directives manually, a robots.txt generator allows swift assembly of necessary instructions.

– Error Reduction: Automated tools can help avoid common errors that arise in hand-written files, such as misspelled directives or a missing wildcard, making it more likely that the directives work as intended.

Conclusion

Using a robots.txt generator is a practical step in managing a website’s interaction with search engines. The resulting text file tells crawlers which parts of your website they may access, helping you focus crawling on the content that matters and reduce unnecessary server load. Whether you’re a seasoned website owner or just getting started, understanding and correctly using a robots.txt file can support your website’s performance and SEO efforts. Take advantage of the tools available to streamline the creation of this important resource, so that your website is crawler-friendly and search-engine ready.