Search Engine Crawler Simulator: Simulate a Search Engine Crawler or Spider on Your Webpage

What is a Search Engine Crawler?

A search engine crawler, often referred to as a web spider or web robot, is an automated program that search engines use to discover, crawl, and index webpages across the internet. Crawlers determine which pages a search engine knows about, and so they shape the information users are served during online searches.

When a crawler visits a webpage, it follows the hyperlinks on that page to other pages and repeats the process on each one, which lets it keep discovering and indexing new content. Along the way, the crawler extracts the content and metadata of each page. This metadata includes the page title, the description, and any keywords the page declares, all of which are stored in the search engine’s index.
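The extraction step described above can be sketched in a few lines of Python. This is a minimal illustration using only the standard library, not how any particular search engine implements it; the names `PageSummaryParser`, `summarize_page`, and `SAMPLE_HTML`, as well as the example URLs, are hypothetical and chosen for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class PageSummaryParser(HTMLParser):
    """Collects the title, selected meta tags, and outgoing links of a page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.title = ""
        self.meta = {}
        self.links = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attrs.get("name") and attrs.get("content") is not None:
            name = attrs["name"].lower()
            if name in ("description", "keywords"):
                self.meta[name] = attrs["content"]
        elif tag == "a" and attrs.get("href"):
            # Resolve relative links against the page URL so they can be queued.
            self.links.append(urljoin(self.base_url, attrs["href"]))

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def summarize_page(html, base_url):
    """Return the metadata and links a simple crawler might record for one page."""
    parser = PageSummaryParser(base_url)
    parser.feed(html)
    parser.close()
    return {
        "title": parser.title.strip(),
        "description": parser.meta.get("description", ""),
        "keywords": parser.meta.get("keywords", ""),
        "links": parser.links,
    }


# Hypothetical page used only for illustration.
SAMPLE_HTML = """<html><head><title>Example Page</title>
<meta name="description" content="A short demo page.">
<meta name="keywords" content="demo, crawler">
</head><body><a href="/about">About</a> <a href="https://example.org/x">X</a></body></html>"""

if __name__ == "__main__":
    print(summarize_page(SAMPLE_HTML, "https://example.com/"))
```

A real crawler would add each collected link to a queue of pages to visit next, which is what produces the recursive path described above.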

Search engine crawlers rely on sophisticated algorithms to decide which pages to crawl and how frequently they revisit them. Several factors influence this crawling frequency, including:

– Quality and Quantity of Content: Pages rich in valuable, relevant content are often crawled more frequently.

– Inbound Links: A greater number of high-quality inbound links can signal to crawlers that a page is worth revisiting.

– Content Freshness: Pages that are updated often tend to be recrawled more frequently than pages that rarely change.

By crawling and indexing pages, search engine crawlers make it possible for users to find the information they seek. Understanding how crawlers behave also helps website owners and marketers optimize their online presence and improve visibility.

Why is Simulating a Search Engine Crawler Important?

Simulating a search engine crawler allows web developers and SEO specialists to understand how search engines interpret their content. This process, broadly termed Search Engine Spider Simulation, provides an artificial environment that mimics how a real crawler would read a webpage’s content.

Key Benefits of Simulating a Search Engine Crawler

1. Identifying Indexing Issues: By simulating the crawler, developers can pinpoint obstacles that could keep search engine crawlers from crawling and indexing their webpages. For instance, they may find that certain multimedia elements, such as images or videos, are not being picked up, or that rules in the robots.txt file block the page from being crawled.

2. Enhancing SEO: Seeing a page the way a crawler does helps web developers decide what to optimize. They can identify missing keywords, ineffective meta tags, or slow loading times that may hurt their search rankings.

3. Testing Website Changes: Before implementing website updates, simulating a crawler’s journey can help ensure that changes will not adversely affect indexing or visibility on search engines. This proactive testing can save time and resources by preventing problems before they occur.

4. Troubleshooting Performance Issues: A simulation can expose various challenges related to website performance, crawlability, and indexing. It may reveal excessive 404 errors or indicate that the website is not user-friendly on mobile devices.
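The robots.txt restriction mentioned in the first benefit is one of the easier checks to reproduce locally. The sketch below uses Python's standard `urllib.robotparser` module to test whether a given user agent may fetch a URL. The helper name `is_crawl_allowed`, the user agent `ExampleBot`, and the sample rules are assumptions made for illustration, and real crawlers may interpret edge cases differently.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt text permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() accepts the file as a list of lines, so no network access is needed.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules: every crawler is kept out of /private/.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

if __name__ == "__main__":
    for url in ("https://example.com/blog/", "https://example.com/private/page.html"):
        print(url, is_crawl_allowed(SAMPLE_ROBOTS, "ExampleBot", url))
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.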

Ultimately, simulating a search engine crawler facilitates a better understanding of how to optimize content for the best performance in search rankings, ensuring that webpages are both crawlable and indexable.

The Role of a Search Engine Crawler Simulator

A search engine crawler simulator is a practical tool for web developers and SEO professionals. This free tool lets users view the SEO elements of a page, including word count, title and description tags, the frequency of keyword phrases, heading tags, and outbound link text.
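Two of these measurements, word count and keyword phrase frequency, are simple enough to sketch directly. The following Python example is one plausible way to compute them, assuming a basic tokenizer that lowercases text and keeps letters, digits, and apostrophes; the function names are hypothetical, and an actual simulator may count words differently.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def word_count(text):
    """Number of word tokens in the text."""
    return len(tokenize(text))


def phrase_frequencies(text, phrase_len=2, top_n=5):
    """Most common phrases of phrase_len consecutive words, with their counts."""
    words = tokenize(text)
    phrases = (
        " ".join(words[i:i + phrase_len])
        for i in range(len(words) - phrase_len + 1)
    )
    return Counter(phrases).most_common(top_n)


# Hypothetical body text used only for illustration.
SAMPLE_TEXT = "Search engine crawlers index pages. A search engine ranks pages."

if __name__ == "__main__":
    print(word_count(SAMPLE_TEXT))
    print(phrase_frequencies(SAMPLE_TEXT, phrase_len=2, top_n=3))
```

Counting two-word and three-word phrases separately is a common way to see which terms a page emphasizes in practice, as opposed to which terms it declares in its metadata.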

The simulator reads a webpage much as a search engine does and shows the content the way the engine would recognize it. For example, it can display elements such as:

– Meta Title: The page title, which search engines typically show as the headline of a result.

– Meta Description: A brief summary of the page content that appears beneath the title in search results.

– Meta Keywords: Key terms the page declares as relevant to its content. Google has stated that it does not use this tag for ranking.

– Heading Tags (H1 to H4): These tags structure content and signal its hierarchy.

– Readable Text Content: The body copy that’s visible to visitors.

– Source Code: The underlying code that powers the webpage.

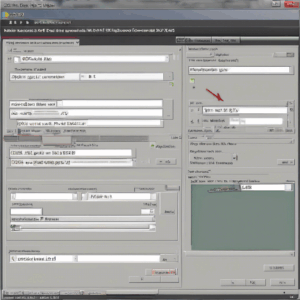
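The heading and readable-text views listed above can be approximated with Python's standard `html.parser` module. This is a simplified sketch, not the tool's actual implementation: the class name `ContentParser`, the choice to skip `script`, `style`, and `title` content, and the sample page are all assumptions made for illustration.

```python
from html.parser import HTMLParser


class ContentParser(HTMLParser):
    """Collects H1 to H4 headings and the text a visitor would read."""

    HEADING_TAGS = {"h1", "h2", "h3", "h4"}
    SKIPPED_TAGS = {"script", "style", "title"}  # content not shown in the body

    def __init__(self):
        super().__init__()
        self.headings = []
        self.text_parts = []
        self._heading_tag = None
        self._heading_buffer = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED_TAGS:
            self._skip_depth += 1
        elif tag in self.HEADING_TAGS:
            self._heading_tag = tag
            self._heading_buffer = []

    def handle_endtag(self, tag):
        if tag in self.SKIPPED_TAGS and self._skip_depth > 0:
            self._skip_depth -= 1
        elif tag == self._heading_tag:
            # Join fragments split by inline tags and normalize whitespace.
            heading = " ".join("".join(self._heading_buffer).split())
            self.headings.append((tag, heading))
            self._heading_tag = None

    def handle_data(self, data):
        if self._skip_depth:
            return
        if self._heading_tag:
            self._heading_buffer.append(data)
        if data.strip():
            self.text_parts.append(data.strip())


def extract_content(html):
    """Return (headings, readable_text) for an HTML document."""
    parser = ContentParser()
    parser.feed(html)
    parser.close()
    return parser.headings, " ".join(parser.text_parts)


# Hypothetical page used only for illustration.
SAMPLE_PAGE = """<html><head><title>Demo</title><style>h1 {color: red}</style></head>
<body><h1>Main Heading</h1><p>First paragraph.</p>
<script>console.log("hidden");</script>
<h2>Sub <em>heading</em></h2><p>Second paragraph.</p></body></html>"""

if __name__ == "__main__":
    headings, text = extract_content(SAMPLE_PAGE)
    print(headings)
    print(text)
```

Note that this sketch only reads the HTML as delivered. Text that a page adds later with JavaScript would not appear, which is one of the gaps a crawler simulation can reveal.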

How to Use a Search Engine Crawler Simulator Tool

Using a search engine crawler simulator is straightforward and user-friendly. Follow these simple steps to assess your website’s visibility and readability from a search engine’s perspective:

1. Visit the Simulator Website: Start by navigating to the search engine crawler simulator’s homepage.

2. Enter the URL: Input the URL of the specific page you wish to analyze. This can be the homepage or any internal webpage.

3. Complete CAPTCHA: This verification step ensures that you are a human user and helps prevent automated submissions.

4. Submit for Analysis: Click on the submit button to process your request.

Once the analysis is complete, the tool will display vital information regarding the webpage, including:

– Meta Title

– Meta Description

– Meta Keywords

– H1 to H4 Tags

– Indexable Links

– Readable Text Content

– Source Code

Tools for Simulating Search Engine Crawlers

A variety of tools are available to simulate search engine crawlers effectively. Popular options include:

– Google Search Console: Offers a URL Inspection tool that allows site owners to see how Google views their pages.

– SEMrush: Provides a Site Audit feature, giving a comprehensive overview of site health, crawlability, and SEO performance.

– Screaming Frog SEO Spider: A downloadable tool that crawls websites’ URLs and fetches key onsite elements for SEO analysis.

These tools can identify common problems that could hinder a webpage’s indexing and visibility, allowing for necessary adjustments that improve overall site performance.

Conclusion: The Importance of Search Engine Crawlers

In digital marketing and online content, understanding search engine crawlers is vital. A search engine crawler simulator lets website owners and developers check how effectively their content can be crawled and indexed by search engines. The simulation helps identify potential indexing issues and provides insights for optimization, which can in turn improve search engine ranking and visibility.

By taking the time to simulate a search engine crawler, you learn what to refine on your website so that it remains competitive in search engine results pages. Whether you’re troubleshooting existing content or planning new projects, the insights from these simulations support better SEO performance. Using simulation tools alongside an understanding of how crawlers work is a sound practice for any serious online presence.